Artificial General Intelligence, abbreviated as AGI, often captures the imagination as the ultimate leap in technological evolution. Envision a machine not limited by specific tasks but displaying a rich cognitive flexibility akin to human thinking. This concept pushes beyond the familiar boundaries of Artificial Intelligence, which, until now, has predominantly excelled in narrowly defined tasks. AGI proposes a universe where machines think, learn, and adapt with unprecedented dexterity.

The journey towards AGI is riddled with both extraordinary promise and formidable challenges. It's a subject where hypothetical scenarios mingle with pressing ethical inquiries. What happens when a machine holds reasoning powers comparable to a human brain? How do we ensure these entities align with human values and safety protocols?

This exploration of Artificial General Intelligence covers its current state of development, the many hurdles it faces, and its potential impact on our world. It's a narrative rich with innovation and thoughtful debate, pushing us to ponder the role we wish AI to play in our collective future.

Understanding AGI

The quest to create Artificial General Intelligence, a form of AI capable of performing any intellectual task that a human being can do, is one of the most ambitious pursuits in the field of technology today. While current AI systems, known as narrow AI, are designed to perform specific tasks like language translation or image recognition, AGI aims to possess the ability to learn and apply knowledge across a diverse range of domains. This is where the dream of machines that can deeply think, reason, and solve novel problems emerges as a distinct possibility.

The concept of AGI embodies the vision of machines that are not just tools but independent entities with cognitive abilities that mirror our own. The journey to realizing AGI is fraught with complexities, requiring advances in multiple scientific disciplines including machine learning, neuroscience, and cognitive sciences. Researchers are striving to create architectures that can replicate the nuanced ways in which human brains process information. This requires building systems that can understand context, recognize patterns, and apply learned knowledge to novel situations in a way that is fluid and natural.

The journey towards AGI is not just a technical challenge but also a philosophical one. It raises profound questions about the very nature of intelligence and consciousness. What does it mean for a machine to think or be aware? These questions echo quandaries that have fascinated humans for centuries. In the words of Ray Kurzweil, a renowned futurist, “We are trying to reverse-engineer the brain at a functional level, to better understand how intelligence actually works in the human mind.”

This pursuit of understanding extends beyond engineering, necessitating a reflection on the ethical dimensions and societal repercussions of creating such entities.

The complexity of achieving AGI also lies in the unpredictability of human intelligence itself. Unlike traditional algorithms that follow predefined instructions, AGI systems must adapt to continuously changing environments, learning in ways that are both dynamic and contextual. This requires an intricate balance of computational power, algorithmic innovation, and an ever-deepening understanding of human cognitive processes. One potential approach is to develop neural network architectures inspired by the human brain, mimicking the interconnectedness and plasticity of neurons so that what a system has learned can keep evolving with experience.

Some experts believe AGI could significantly transform our world, potentially helping to resolve complex global issues. However, there is a shadow side: the rise of AGI raises significant ethical concerns, including questions about autonomy and control. If a machine can truly think independently, who bears responsibility for its actions? These considerations demand a robust framework of governance and ethical guidelines, ensuring that the deployment of AGI aligns with humanity's collective values and safeguards our well-being.

Current Developments

The pursuit of Artificial General Intelligence is a captivating endeavor that has garnered the attention of researchers and tech enthusiasts worldwide. As of late 2023, work towards true AGI continues through groundbreaking research and experiments. Various institutions, ranging from academic hubs to leading tech corporations, are spearheading innovative projects to bridge the gap between machine learning and human-like intelligence.

One of the most notable players in the AGI space is OpenAI, which has been making significant strides with projects such as the GPT series. The latest iteration, GPT-4, though technically narrow AI, has shown abilities previously believed to be years away. It performs well in areas such as language understanding and generation, although it still operates within predefined parameters rather than exhibiting the autonomous learning and adaptability expected from AGI. Its success has fueled further interest and investment in developing more versatile AI frameworks that could eventually lead to AGI.

MIT and Stanford University are also at the forefront, developing models that incorporate elements of neuroscience to enhance AI's cognitive capacities. These projects aim to replicate the brain's unique processes through advanced computational techniques. By integrating insights from human cognition, researchers hope to produce AI systems that can mimic the nuanced learning patterns inherent in human minds. This can potentially result in machines capable of higher-order thinking skills, such as critical reasoning and ethical decision-making.

Significant advances have also been made in hybrid models, which link symbolic reasoning with neural networks to blend the strength of structured logic with the adaptability of deep learning. This dual approach is promising for overcoming the limitations observed in current AI systems, which often struggle when faced with tasks requiring complex planning or abstract thought.
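
To make the hybrid idea a little more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions, not how any particular research system works: a hand-weighted logistic unit stands in for a trained neural network, and the feature names, weights, and the `FLIGHTLESS` rule set are all hypothetical. The learned part produces a soft score, and the symbolic part applies explicit rules to the resulting facts.

```python
import math

# Hypothetical weights standing in for a trained neural network.
WEIGHTS = {"has_wings": 2.0, "has_feathers": 2.5, "has_fur": -3.0}
BIAS = -2.0

def neural_bird_score(features):
    """Neural-style component: map raw features to a score between 0 and 1."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

# Symbolic component: explicit knowledge that is easy to inspect and edit.
FLIGHTLESS = {"penguin", "ostrich"}

def can_fly(features, species=None, threshold=0.5):
    """Turn the soft score into a discrete fact, then reason with rules."""
    facts = set()
    if neural_bird_score(features) >= threshold:
        facts.add("bird")
    if species in FLIGHTLESS:
        facts.add("flightless")
    # Rule: birds fly unless they are known to be flightless.
    return "bird" in facts and "flightless" not in facts
```

Calling `can_fly({"has_wings": 1, "has_feathers": 1})` returns `True`, while passing `species="penguin"` with the same features returns `False`. The pattern-matching part handles the fuzzy input, and the rule handles the exception that would be hard to learn from a handful of examples.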

"The combination of symbolic reasoning with the power of neural networks could be the key to unlocking general intelligence in machines," states Dr. Alan McIntyre, a leading AI researcher at Carnegie Mellon University.

Machine learning systems capable of self-improvement through trial and error are another exciting development. Such systems are trained using reinforcement learning techniques that mirror the way humans learn through interaction. Notable companies in this area, such as DeepMind, have showcased AI agents that learn and excel in their environments without step-by-step human instruction, a step closer to AGI.
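
As a rough illustration of learning by trial and error, the sketch below uses tabular Q-learning, one of the simplest reinforcement learning methods, on a toy problem. Everything here is an assumption made for the example: the corridor environment, the function names, and the parameter values are hypothetical and far simpler than anything used in real research systems. An agent starts at the left end of a corridor and is rewarded only when it reaches the right end.

```python
import random

def train_corridor_agent(n_states=5, episodes=500, alpha=0.5,
                         gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor with a reward at the last cell."""
    rng = random.Random(seed)
    # q[state][action]: action 0 moves left, action 1 moves right.
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Explore at random sometimes, and whenever both actions look equal.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            best_next = 0.0 if next_state == goal else max(q[next_state])
            # Nudge the estimate toward reward plus discounted future value.
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q

def greedy_policy(q):
    """Best-known action for every non-goal cell."""
    return ["right" if right > left else "left" for left, right in q[:-1]]
```

Nobody tells the agent which way to go. After training, `greedy_policy(train_corridor_agent())` prefers "right" in every cell, a preference learned purely from the reward signal.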

Despite the significant progress, the path to AGI is still fraught with challenges. Researchers must overcome issues of scalability, resource allocation, and ethical governance. Ensuring these intelligent systems operate within safe and fair frameworks remains a priority. As these developments unfold, the conversation around AGI has become an exciting blend of scientific inquiry and philosophical debate, equally challenging and inspiring for all involved.

Potential Challenges

The road to Artificial General Intelligence is lined with significant challenges, both technical and ethical. One of the primary hurdles is ensuring that these machines possess a learning and reasoning capacity that rivals the human brain, something that involves not just processing speed but also sophisticated neural network structures. Researchers are actively pushing boundaries, trying to mimic the human brain's nuanced synaptic connections in digital form. But it's not just about replicating intelligence; it's about crafting entities that can comprehend and autonomously respond to unprecedented situations.

Another critical challenge lies in resource allocation, as the computational power needed to test and run such highly advanced systems is immense. As these machines grow smarter, so does the demand on processors, requiring energy supplies that could further strain infrastructure. There's a balancing act between developing cutting-edge technology and ensuring it remains sustainable, something that gives pause to governments and companies alike. Indeed, the energy appetite of future AGI systems could dwarf that of today's computing infrastructure, pushing the need for renewable solutions front and center in the conversation.

The issue of unpredictable outcomes is yet another thorny problem. As machines gain autonomy, predicting their decisions becomes far harder. Experience with traditional algorithms offers limited foresight into AGI behavior, raising essential questions around control and oversight. Consider the complexity of setting boundaries: machines with such advanced capabilities might exhibit behaviors not covered by existing regulatory frameworks, demanding an overhaul of how we design laws and safety nets to keep them in check. This work will face intense scrutiny from ethicists, lawmakers, and technologists.

"AGI calls for a radical rethinking of how we design systems that make decisions—it's about embedding a moral compass," remarked Yoshua Bengio, a pioneer in deep learning research.

Security risks also loom large, as AGI systems could become targets for malicious attacks. Imagine a scenario where an adversary exploits an AGI system’s learning algorithms, potentially leading to disastrous decisions or outcomes. Cybersecurity in the world of AGI isn't just preventative; it involves anticipating unforeseen vulnerabilities. This adds layers of complexity to already challenging security protocols, making the task akin to a high-stakes chess match. The risk of weaponization sees public and private sectors striving to develop failsafe measures that can prevent AI systems from being misused in warfare or sabotage.

Finally, perhaps the most formidable challenge is the ethical landscape. As we inch closer to creating machines that could rival human intellect, questions of moral responsibility and societal impact are pervasive. How do we safeguard human jobs in a world where machines can think and adapt faster, and perhaps better? The social contract between employer and employee will likely undergo significant changes, urging a re-evaluation of how society values work. It’s about reconciling technological advances with empathy and humanity to ensure the technology serves humanity rather than the other way around.

Impacts and Ethics

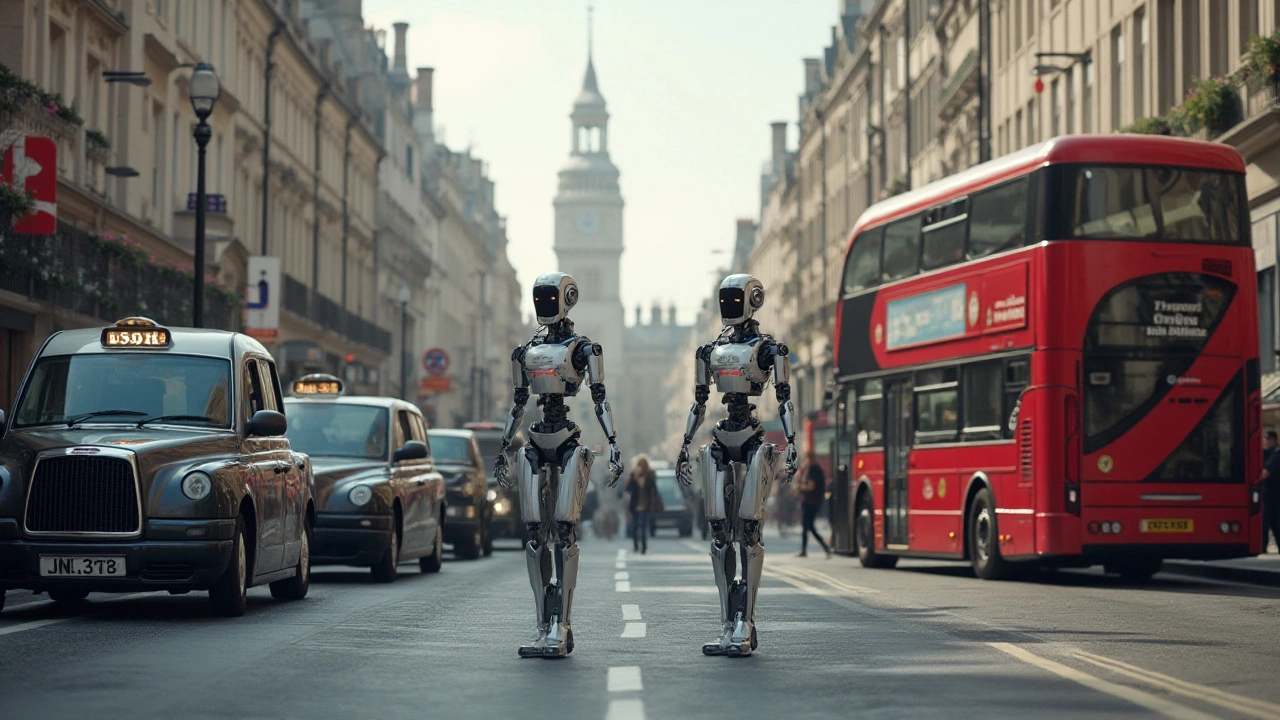

The advent of Artificial General Intelligence is set to stir transformative changes in the socio-economic fabric of our society. AGI's potential to perform tasks with human-like understanding and empathy could revolutionize industries, from healthcare and education to transport and customer service. Imagine a world where a digital entity understands the intricacies of human emotions and can provide mental health support or teach complex subjects with tailored pedagogy, all while drawing upon an extensive database of information and experiences. Such capabilities could bridge the gaps in areas where human resources are limited or overburdened, serving as a boon to global productivity and welfare.

However, the prospect of creating machines with human-like intelligence also introduces a spectrum of ethical dilemmas. A central concern is the risk of AGI surpassing human intelligence, leading to scenarios where machines might autonomously make decisions that impact human lives or even the ecological balance on Earth. The challenges multiply when considering the programming of ethical frameworks. How do developers encode a sense of morality and prioritize values such as empathy, justice, and autonomy within an AGI? As one group of researchers put it, "The control problem might be the last problem we ever have to solve if AGI develops unchecked." The remark highlights the gravity and urgency of embedding a robust ethical framework within these systems.

Balancing the benefits and risks of AGI requires international cooperation and a well-structured governance model. Policymakers and technologists need to work in tandem to draft regulations ensuring ethical standards and transparency in AGI development. This involves establishing rigorous testing protocols and creating algorithms with audit trails to track decision-making processes. The process is intricate and demands relentless commitment to ongoing education and adaptation as AGI technology evolves. The key is maintaining a human-centric perspective, so that these systems serve humanity and not the other way around.
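
One way to picture an audit trail for decision-making is a log in which every entry is cryptographically linked to the one before it, so that later tampering can be detected. The sketch below is a minimal illustration using only Python's standard library. The `AuditTrail` class and its field names are hypothetical choices made for this example, not a regulatory standard or an existing tool.

```python
import hashlib
import json

class AuditTrail:
    """Append-only decision log in which each entry hashes the previous one."""

    GENESIS = "0" * 64  # placeholder hash used before the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # Sorted keys give a stable serialization to hash.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, inputs, decision, rationale):
        """Store what the system saw, what it decided, and why."""
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"inputs": inputs, "decision": decision,
                "rationale": rationale, "prev_hash": prev_hash}
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry has been altered or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

An auditor could call `verify()` at any time. If someone quietly edits an earlier decision or its rationale, the stored hashes no longer match and the check fails. A real deployment would also need timestamps, access control, and secure storage, which this sketch leaves out.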

AGI also has significant economic implications. As machines become capable of performing jobs traditionally held by humans, labor markets could face substantial disruptions. While automation might boost productivity and innovation, it also demands a shift in workforce dynamics, creating a need for reskilling and upskilling initiatives. There is an optimistic view that AGI could liberate humans from mundane tasks, enabling them to engage in more creative and fulfilling endeavors. However, this requires a proactive approach to education systems and a reevaluation of economic models to ensure equity in the wealth generated through AGI advancements.

Moreover, as AGI systems become integrated into our daily lives, questions around data privacy, surveillance, and consent gain prominence. Who controls the data these intelligent systems collect, and how is it handled? Such questions are vital as they directly affect individual freedoms and societal trust. Navigating these ethical waters requires clear communication between technologists and citizens, fostering an environment where stakeholders can have informed discussions about the trade-offs and benefits of embracing AGI technologies.